Graphics Processing Units (GPUs)

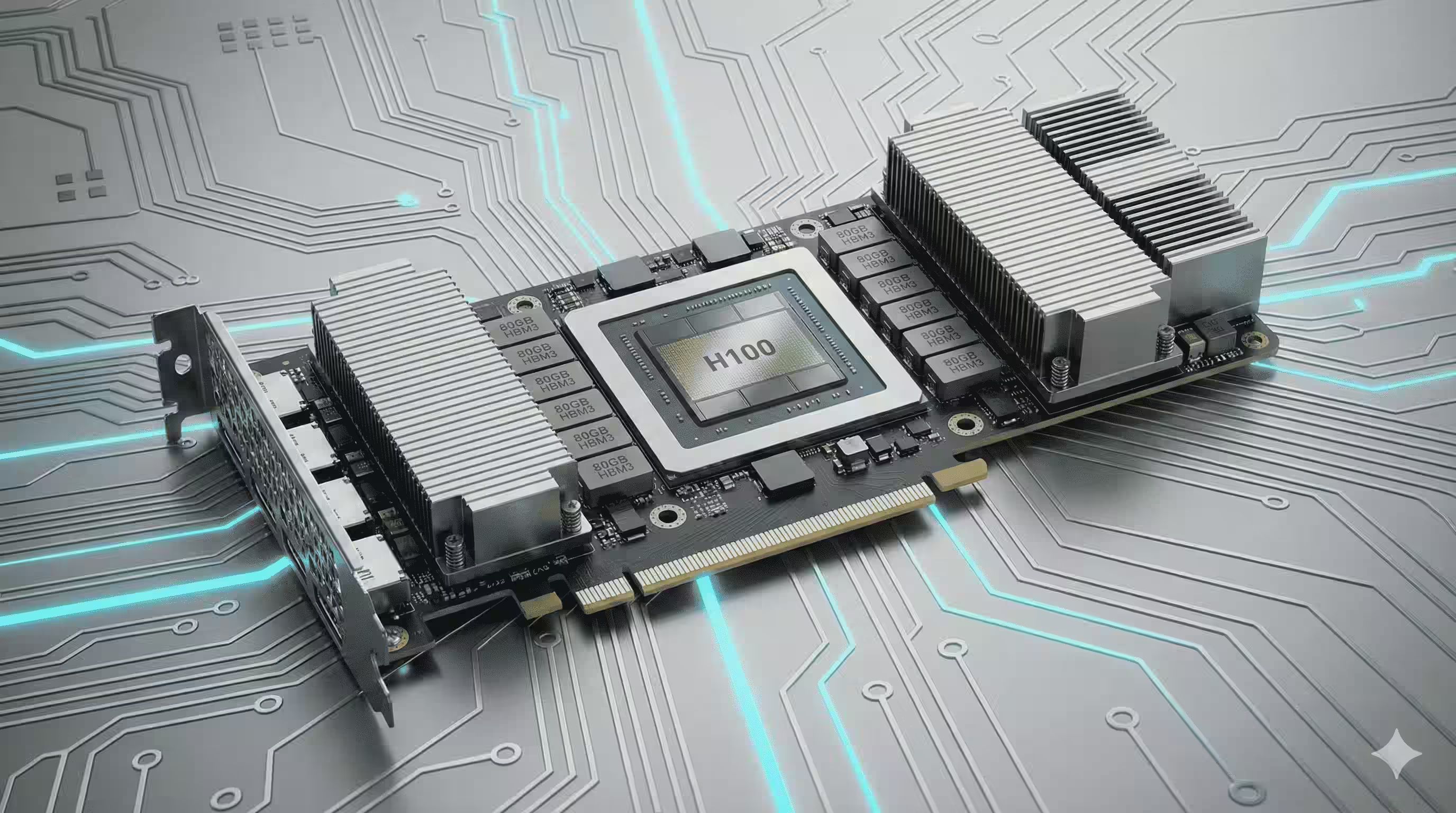

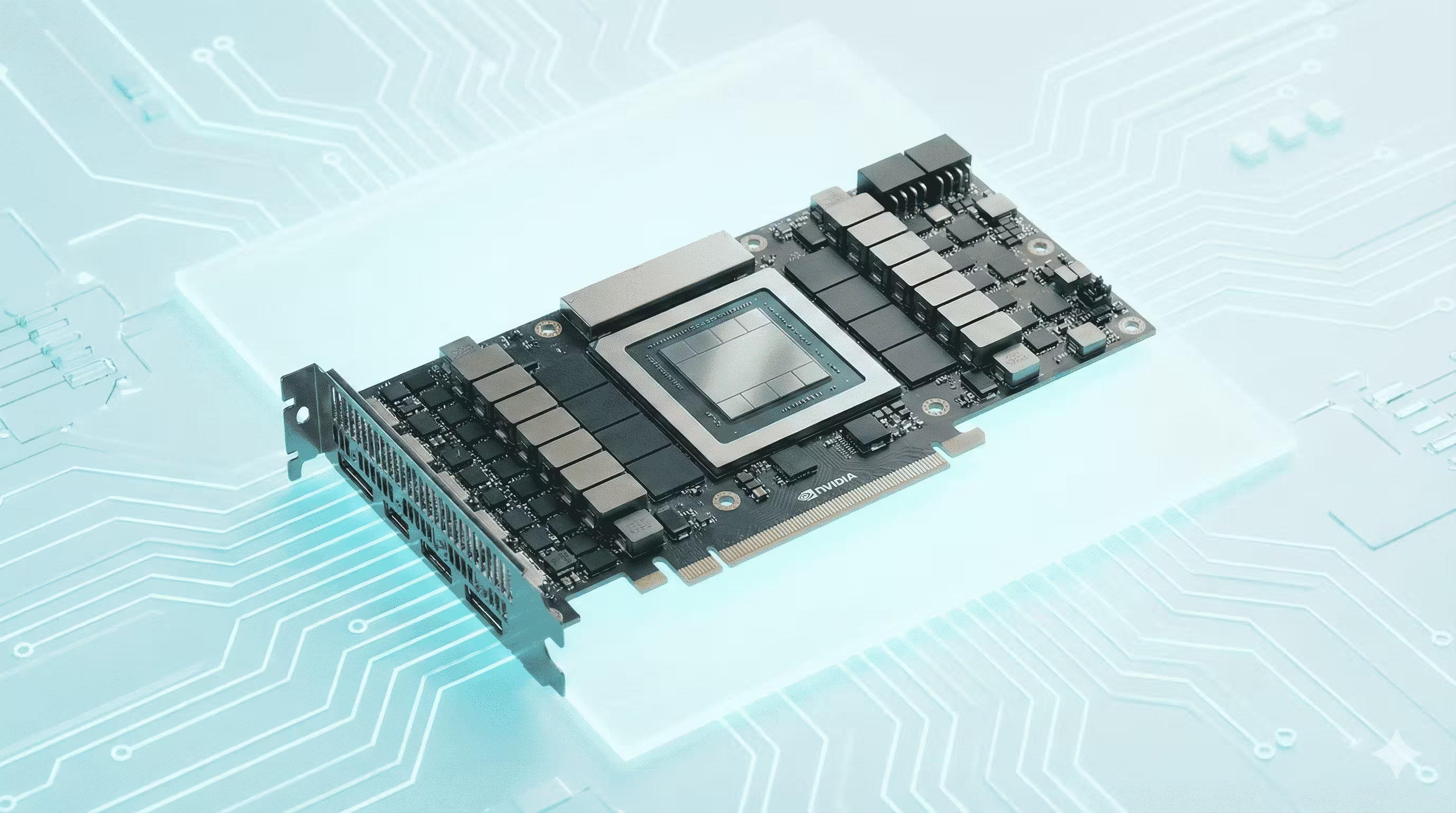

NVIDIA H100

NVIDIA's flagship Hopper-generation accelerator for large language model training and inference, with 80GB of HBM3 memory.

- 80GB HBM3 Memory

- 3.35 TB/s Memory Bandwidth (SXM5)

- PCIe Gen5 / NVLink
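
To put the 3.35 TB/s figure in context, the Python sketch below estimates the shortest possible time to read a model's weights once from HBM. The 7-billion-parameter FP16 model and the helper name `min_weight_read_time_s` are hypothetical, and the estimate assumes the full peak bandwidth is achieved, which real workloads do not reach.

```python
# Listed H100 memory bandwidth: 3.35 TB/s, in decimal units (10**12 bytes/s).
H100_BANDWIDTH_BYTES_PER_S = 3.35e12

def min_weight_read_time_s(num_params: float, bytes_per_param: float,
                           bandwidth: float = H100_BANDWIDTH_BYTES_PER_S) -> float:
    """Lower bound, in seconds, on reading every weight once at peak bandwidth."""
    return num_params * bytes_per_param / bandwidth

# Hypothetical 7-billion-parameter model stored in FP16 (2 bytes per parameter).
t = min_weight_read_time_s(7e9, 2)
print(f"{t * 1e3:.2f} ms per full pass over the weights")  # 4.18 ms
```

For a dense model generating one token at a time, each step has to read every weight, so 1/t (about 239 steps per second here) is an optimistic ceiling rather than an expected throughput.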

NVIDIA A100

An industry-standard GPU for AI training and HPC workloads, built on the proven Ampere architecture.

- 40GB HBM2 / 80GB HBM2e

- Multi-Instance GPU (MIG), up to 7 instances

- TensorFloat-32 Support
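
TensorFloat-32 (TF32) keeps FP32's 8-bit exponent but only 10 explicit mantissa bits, the same mantissa width as FP16. The Python sketch below emulates that precision loss on the CPU by rounding a float32 value's 23-bit mantissa down to 10 bits. It assumes round-to-nearest-even, the function name `to_tf32` is hypothetical, and it only illustrates the number format, not how A100 Tensor Cores or any library perform the conversion.

```python
import struct

def to_tf32(x: float) -> float:
    """Round x (via float32) to TF32 precision: 8-bit exponent, 10-bit mantissa.

    Illustrative sketch only: assumes round-to-nearest-even and ignores
    NaN/Inf and overflow edge cases.
    """
    # Reinterpret the float32 encoding of x as an unsigned 32-bit integer.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # float32 has 23 mantissa bits; TF32 keeps the top 10, dropping the low 13.
    keep_lsb = (bits >> 13) & 1
    # Adding 0xFFF plus the kept LSB rounds to nearest, with ties to even.
    bits = (bits + 0xFFF + keep_lsb) & 0xFFFFE000
    return struct.unpack("<f", struct.pack("<I", bits))[0]

print(to_tf32(1 + 2**-12))  # 1.0: the 2**-12 term is below TF32 precision
print(to_tf32(1 + 2**-10))  # 1.0009765625: exactly representable in TF32
```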

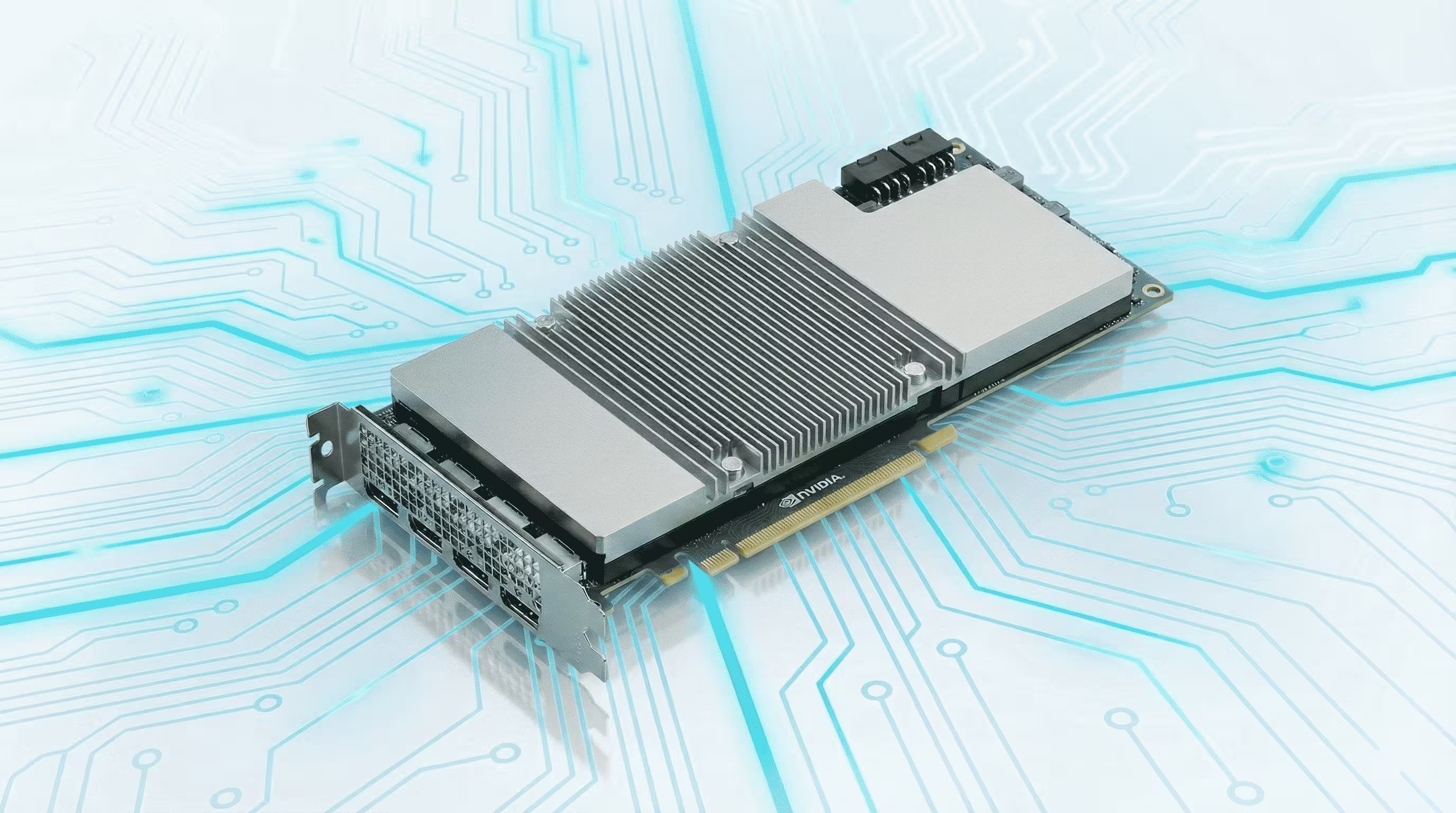

NVIDIA RTX 6000 Ada

Professional workstation GPU for AI development, rendering, and visualization workloads.

- 48GB GDDR6

- Ada Lovelace Architecture

- Workstation Certified
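
The memory capacities in this section (40GB or 80GB on A100, 80GB on H100, 48GB on RTX 6000 Ada) can be compared against a rough weights-only footprint. The Python sketch below is a hypothetical back-of-the-envelope helper, not a sizing tool: it counts only parameters times bytes per parameter, treats GB as 10^9 bytes, and reserves an assumed 10% headroom. Activations, KV cache, optimizer state, and framework overhead all add to the real requirement.

```python
# Listed on-board memory per GPU, in GB (treated here as 10**9 bytes).
GPU_MEMORY_GB = {
    "A100 40GB": 40,
    "A100 80GB": 80,
    "H100": 80,
    "RTX 6000 Ada": 48,
}

def weights_gb(num_params: float, bytes_per_param: float) -> float:
    """Size of the model weights alone, in GB."""
    return num_params * bytes_per_param / 1e9

def fits(num_params: float, bytes_per_param: float, gpu: str,
         headroom: float = 0.9) -> bool:
    """True if the weights use at most `headroom` of the GPU's memory.

    The 0.9 default is an arbitrary assumption, not a vendor figure.
    """
    return weights_gb(num_params, bytes_per_param) <= headroom * GPU_MEMORY_GB[gpu]

# Hypothetical 30-billion-parameter model in FP16 (2 bytes per parameter): 60 GB.
for gpu in GPU_MEMORY_GB:
    print(gpu, fits(30e9, 2, gpu))
```

With 8-bit weights (1 byte per parameter) the same model needs about 30 GB and passes the check on every card listed, though the omitted runtime memory still has to fit alongside it.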